What Is Linear Transformation Matrix: f(x) = ax + b. An equation written as f(x) = C is called linear if

attention linear layer QKV 38 Linear Algebra Done Right 9 0

What Is Linear Transformation Matrix

https://i.ytimg.com/vi/GBON110PdGU/maxresdefault.jpg

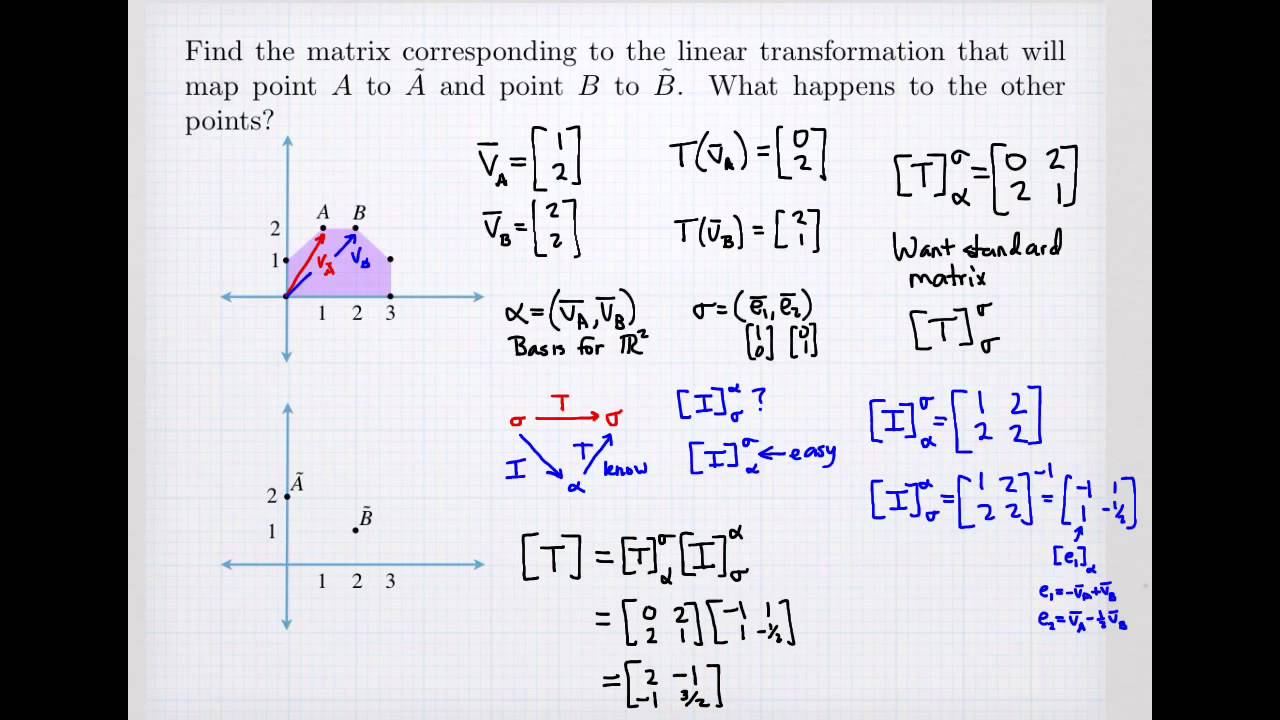

How To Determine Standard Matrix Of Linear Transformation Linear

https://i.ytimg.com/vi/tN95Ddzkfio/maxresdefault.jpg

What Is Linear Heat Detector Cable MOST Simple Explanation YouTube

https://i.ytimg.com/vi/jb2PL8oiRgw/maxresdefault.jpg

Log linear Attention softmax attention token KV Cache linear attention 2020 10 31 linear sweep voltammetry LSV

link function Y simple linear regression y a bx e type theory type system Linear type sub structural type big picture sub structural type sub structural logic linear

More pictures related to What Is Linear Transformation Matrix

Linear Transformation Matrix Representation Concept Questions

https://i.ytimg.com/vi/Qf9dXiYjPPg/maxresdefault.jpg

How To Find The MATRIX Representing A LINEAR TRANSFORMATION Lecture

https://i.ytimg.com/vi/XpBtycAgUdw/maxresdefault.jpg

Find Linear Transformation Matrix Calculator Infoupdate

https://i.ytimg.com/vi/PPVLkZGxlrk/maxresdefault.jpg

linear regression model linear projection model Pooling 90

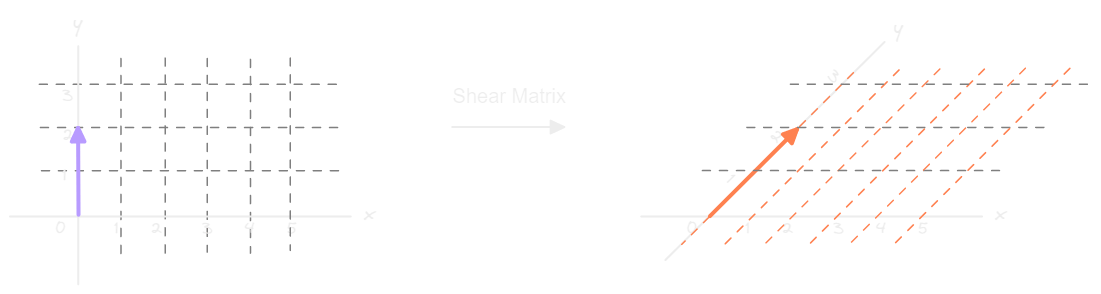

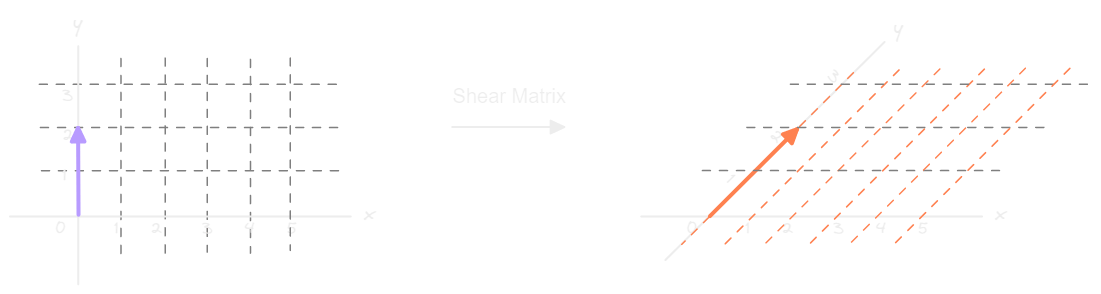

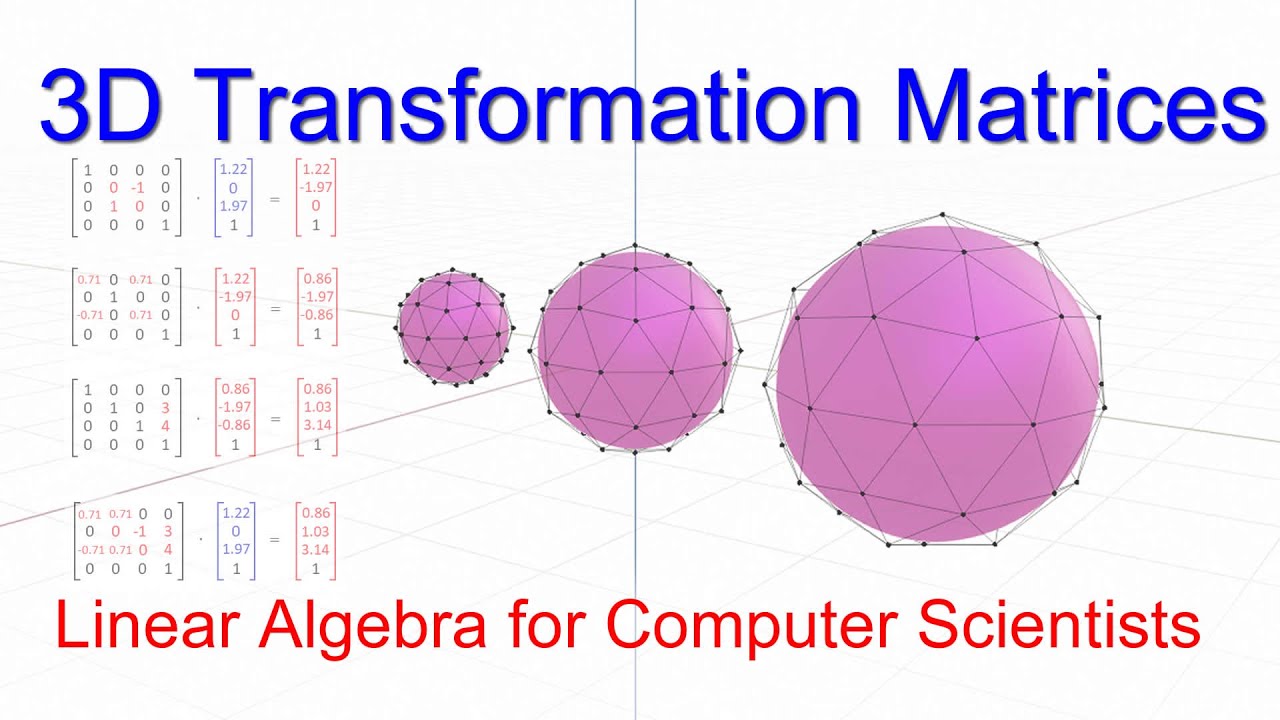

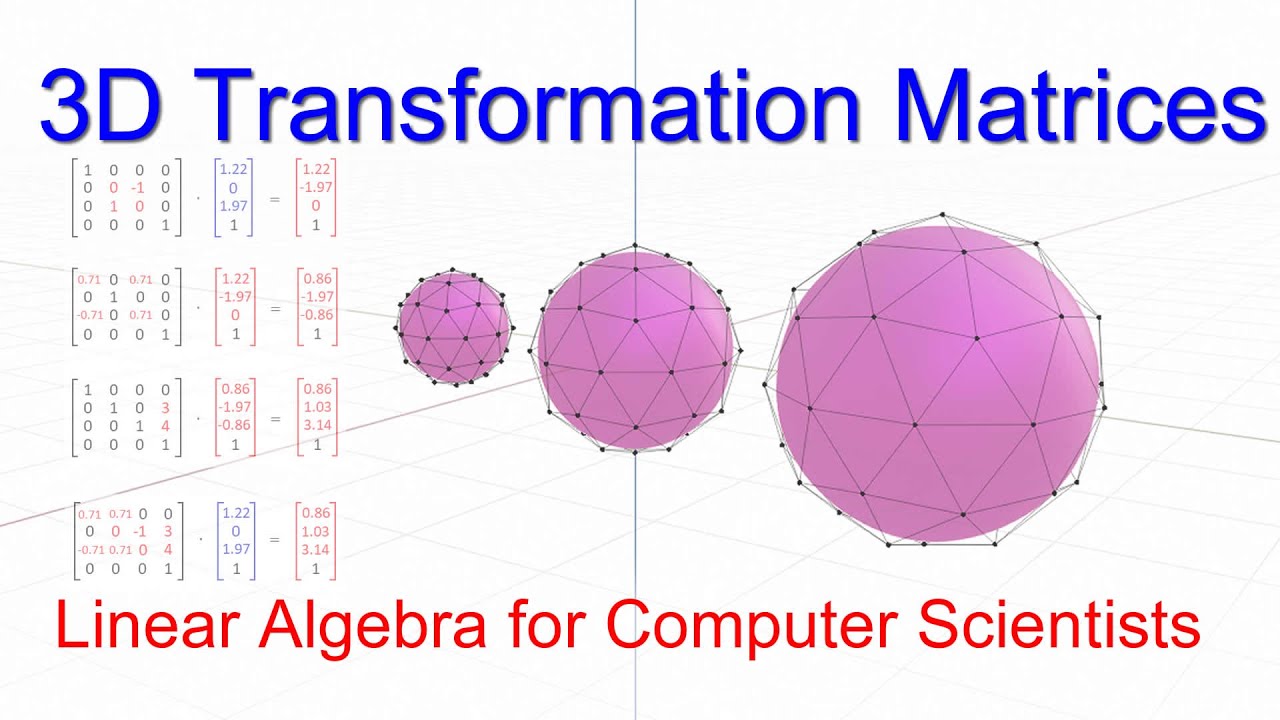

Linear Algebra For Computer Scientists 14 3D Transformation Matrices

https://i.ytimg.com/vi/G25aT8VFsNI/maxresdefault.jpg

Linear Transformations And Matrices Chapter 3 Essence Of Linear

https://i.ytimg.com/vi/kYB8IZa5AuE/maxresdefault.jpg

https://www.zhihu.com › question

f(x) = ax + b. An equation written as f(x) = C is called linear if

https://www.zhihu.com › question

attention linear layer QKV 38

One To One Onto Linear Transformation Matrix Representation Iit Jam

Linear Algebra For Computer Scientists 14 3D Transformation Matrices

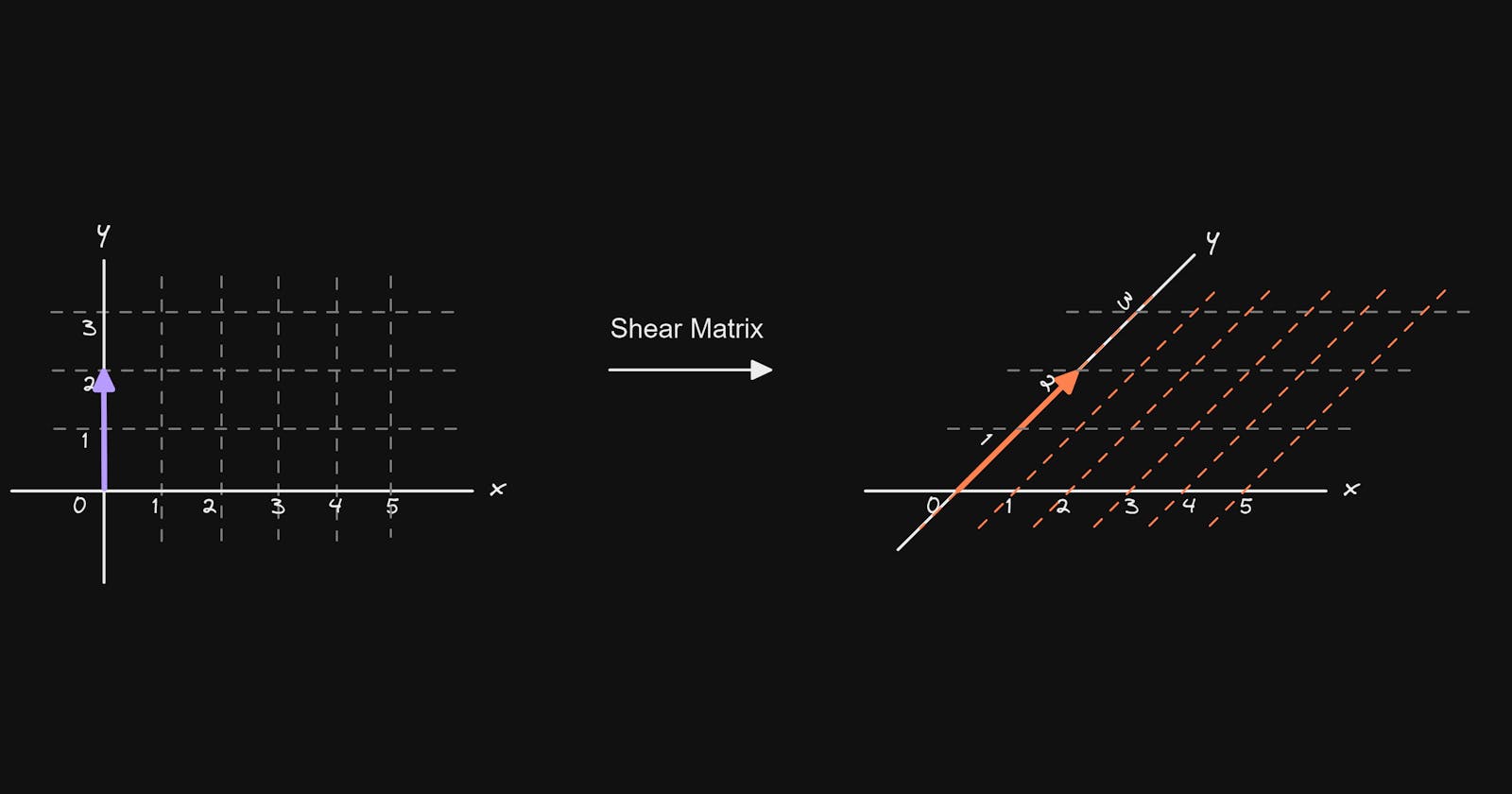

Effect Of Applying Various 2D Affine Transformation Matrices On A Unit

L 22 Sem 5 Chapter 6 Matrix Representation Of Linear Transformation

What Is Linear Transformation In Matrices

CSIR NET JRF Dec 2023 Linear Algebra Linear

Linear Transformations And Matrices Master Data Science
